Warp-Speed Wednesdays

Your Must-Read Tech Updates: Boston Dynamics Atlas 2.0, Tesla Bot, Robotaxi, NVIDIA Robot Model GR00T, and More

Robotics - A Game of Thrones

Keep your eyes peeled! I’m about to drop a fresh chapter in the Hitchhiker’s Handbook that dives into the Dreadnought, AKA the machine that builds the machine. Get ready to discover why the future of commercial robots won’t be just fancy vacuums or mechanical arms, but capable humanoids. Below is only a brief glimpse into the robot revolution.

Tesla Bot: Expect the Unexpected in 2024

In 2023, Tesla made significant progress on its humanoid robot, Optimus (aka Tesla Bot). Moving from the Bumble C ("Bumblebee") prototype to the sleeker, more Apple-esque Optimus Gen-2, the robot demonstrated improved locomotion and a more human-like gait.

Tesla is training its end-to-end neural network with data collected through a low-latency teleoperation system. The upgraded Optimus Gen-2 features an articulated neck, tactile hands, and free-moving hips, as if it had just finished a hot yoga session.

Boston Dynamics: Plans to Keep Its Throne

Boston Dynamics has retired its famous hydraulic Atlas 📽, which inspired a generation of engineers and robot dreamers for almost a decade.

BD/Hyundai unveiled Atlas 2.0 by having it rise from the floor like the undead girl from The Ring crawling out of your TV. The new hardware marks a significant shift away from Marc Raibert's long-standing preference for hydraulic actuation toward electric actuators. This transition reflects a broader industry trend toward robust electric actuators, driven by the expectation that they will offer a more cost-effective, compact, and scalable mechanical design while delivering greater force relative to the robot's weight.

The new Atlas targets dull, repetitive, dirty, and dangerous tasks. Following Tesla's playbook, Hyundai will deploy the robot in its car factories. The move aims to cut manufacturing costs and provide a real-world setting to train the robot away from the critical eyes of the public.

Also expect BD to move away from hand-engineered, model-based control algorithms such as model predictive control (MPC) and to embrace deep neural networks. Here is a conference seminar from BD comparing MPC with reinforcement learning (RL) controllers. 📽
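For readers new to MPC, here is a toy sketch of the idea, not BD's controller: at every control step, the controller uses a hand-written dynamics model to simulate every candidate action sequence over a short horizon, picks the cheapest, applies only its first action, and then re-plans. The point-mass model, cost weights, horizon, and three-level force set are illustrative assumptions.

```python
import itertools

DT = 0.1                    # control period in seconds (assumed)
BLOCK = 5                   # each planned force is held for 5 steps ("move blocking")
FORCES = (-1.0, 0.0, 1.0)   # discrete candidate forces (assumed actuator limits)

def step(pos, vel, force):
    """Hand-written dynamics model: a 1 kg point mass on a frictionless line."""
    vel = vel + force * DT
    pos = pos + vel * DT
    return pos, vel

def plan_cost(pos, vel, plan, target):
    """Roll a candidate plan through the model and score the predicted trajectory."""
    cost = 0.0
    for force in plan:
        for _ in range(BLOCK):
            pos, vel = step(pos, vel, force)
            # Penalize distance to target, speed, and effort (weights are assumptions).
            cost += (pos - target) ** 2 + 0.1 * vel ** 2 + 0.01 * force ** 2
    return cost

def mpc_action(pos, vel, target, n_blocks=3):
    """Search every plan over the horizon and return only its first action."""
    plans = itertools.product(FORCES, repeat=n_blocks)
    best = min(plans, key=lambda plan: plan_cost(pos, vel, plan, target))
    return best[0]

def run(target=1.0, steps=100):
    """Closed loop: re-plan at every step, apply one action, repeat."""
    pos, vel = 0.0, 0.0
    for _ in range(steps):
        force = mpc_action(pos, vel, target)
        pos, vel = step(pos, vel, force)  # the toy "real robot" matches the model exactly
    return pos, vel

if __name__ == "__main__":
    pos, vel = run()
    print(f"final position {pos:.3f} m, velocity {vel:.3f} m/s")
```

The parts an engineer writes by hand here, the `step` model and the cost weights, are what a policy trained with RL replaces with a network that maps observations directly to actions.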

No, this isn’t a Drake concert. This is NVIDIA’s GTC!

After 20+ years of deep work, NVIDIA is leading the AI hype, with Jensen Huang as lead vocalist. And wow, did they put on a performance this year!

I recommend watching Jensen’s keynote 📽 and exploring the endless catalog of seminars and talks about how deep learning and GPUs are shaping, and will continue to shape, tech, biologics, gaming, and robotics.

The most exciting part (yes, I'm biased) was that NVIDIA has thrown its hat into the robotics ring, and Dr. Jim Fan (ex-OpenAI) is leading the charge to develop Project GR00T, a foundation model for humanoid robots. NVIDIA partnered with an extensive list of robotics companies to develop this model: 1X Technologies, Agility Robotics, Apptronik, Boston Dynamics, Figure AI, Fourier Intelligence, Sanctuary AI, Unitree Robotics, and XPENG Robotics.

One Model to Rule Them All: Remember, research from Google and other institutions 📽 showed that neural network models trained on data pooled from a diverse array of robots generally outperformed models trained on a single robot. The idea is that one model can learn from robots of every shape and size, whether legged, wheeled, flying, or arm-based, instead of being tied to a single body.

This finding has significant implications for scalability: it suggests that a single foundation model could apply across many robotic platforms. Such a universal model could transform how neural networks are integrated into robots, dramatically improving efficiency and adaptability in the field.
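One practical hurdle with pooling data is that each robot has a different action space: a 7-joint arm, a 2-D wheeled base, a 12-joint quadruped. A simple way to let one network train on all of them is to pad every action to a shared width and mask the padding out of the loss. The sketch below shows only that data-handling step, under that assumption; it is not necessarily what Google's or NVIDIA's models do, and the robot names and action sizes are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    robot: str            # which (hypothetical) embodiment produced the sample
    action: list[float]   # native action vector; its length differs per robot

def pad_actions(samples):
    """Pad every action to the largest action size and record which slots are real."""
    width = max(len(s.action) for s in samples)
    padded, masks = [], []
    for s in samples:
        extra = width - len(s.action)
        padded.append(s.action + [0.0] * extra)
        masks.append([1.0] * len(s.action) + [0.0] * extra)
    return padded, masks

def masked_mse(predictions, targets, masks):
    """Mean squared error over real slots only, so no robot is penalized for dims it lacks."""
    total, count = 0.0, 0.0
    for pred, tgt, mask in zip(predictions, targets, masks):
        for p, t, m in zip(pred, tgt, mask):
            total += m * (p - t) ** 2
            count += m
    return total / count

if __name__ == "__main__":
    batch = [
        Sample("arm", [0.1] * 7),
        Sample("wheeled", [0.5, -0.2]),
        Sample("quadruped", [0.0] * 12),
    ]
    padded, masks = pad_actions(batch)
    print([len(row) for row in padded], [sum(m) for m in masks])
```

One shared model can then predict a fixed-width action vector for every robot, while the mask keeps each robot's loss limited to the joints it actually has.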

Elon Doubles Down on Robotaxi Despite Internal Opposition

Let’s not get pulled into the black hole of Elon drama; you can pick that up at any news outlet. Elon has turned his focus back to Tesla and the Robotaxi. He has seen enough from Full Self-Driving (FSD) v12 and the AI industry to postpone the low-cost "value" vehicle in favor of an autonomous one.

Jim Fan speculates that FSD v13 will use Grok-1.5V, the multimodal model from Elon’s other AI company, xAI, as an LLM reasoning engine to handle edge cases. That would require fast inference, but Wayve has shown it could be feasible. 📽 I believe vision-language models (VLMs) like these will be a huge step toward AIs that understand the physical world.

This is a bold bet, but if Tesla is first to market with a capable, widely deployed autonomous vehicle, it would dramatically increase the value of its fleet and the company. Elon is known for not shying away from bold visions, even when his employees think he is too aggressive.

Let me spare you 30 minutes and summarize Elon’s lecture at Autonomy Day 2019:

Integrated Development: Tesla distinguishes itself by developing both hardware and software in-house.

Data-Driven Improvements: Tesla's ability to collect extensive data from its fleet of over a million connected vehicles is a significant advantage. This "fleet learning" approach lets Tesla continuously refine its AI with feedback from real-world driving data. Understanding the insanity of human drivers is stupidly difficult, so set up the cameras and let the AI watch and figure it out.

Economic Impact of Robotaxis: Musk teased that a Robotaxi could generate about $30,000 a year in gross profit, significantly increasing a vehicle's utility and efficiency. It is still too early to take out a business loan for your robotaxi service, but it is hard to bet against Elon and feel comfortable that you will win.

AI - New Year, Same Thrill

AI Agents Are Coming… But We Are Early

In a riveting talk, AI expert Andrew Ng introduced the concept of agentic workflows, marking a shift from AI models that answer in a single pass to more dynamic, iterative processes. Here’s a summary of what he shared:

From Simple Responses to Complex Interactions: Traditionally, AI models respond to a prompt with an immediate answer, like writing an essay in one go without any edits. Ng proposed agentic workflows instead, in which AI models, like humans, draft, review, and refine their outputs over several passes. This iterative process improves the quality of AI-generated content.

Case Study Success: Ng demonstrated the power of agentic workflows on a coding benchmark (HumanEval), showing that an older model, GPT-3.5, wrapped in an agentic workflow could outperform a newer one, GPT-4, prompted zero-shot. In other words, how you use a model can matter as much as the model itself.

Emerging Design Patterns: Ng highlighted several innovative design patterns that are reshaping how we interact with AI:

Reflection: Allowing AI to critique and improve its own work.

Planning and Multi-Agent Collaboration: Having AI break complex tasks into steps, and employing multiple AI agents to tackle them collectively, simulating a more human-like team workflow.

Enhanced Tool Use: Letting AI call external resources, such as web search or code execution, to expand its capabilities.

The Road Ahead: Adopting agentic workflows can lead to significant productivity boosts and more robust AI applications. Ng’s insights suggest that the future of AI lies in creating systems that can operate with a degree of autonomy and sophistication, bringing us closer to the realization of artificial general intelligence (AGI).
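Reflection is the easiest of these patterns to sketch. Below is a minimal Python loop, assuming generic `generate` and `critique` callables; `fake_llm` is a hypothetical stand-in for a real model call, and the unit-test critic mirrors the coding-benchmark idea of feeding test failures back to the model. This is an illustration, not Ng's code.

```python
from typing import Callable, Optional

def reflect(task: str,
            generate: Callable[[str], str],
            critique: Callable[[str], Optional[str]],
            max_rounds: int = 3) -> str:
    """Draft, critique, revise: stop when the critic is satisfied or rounds run out."""
    draft = generate(task)
    for _ in range(max_rounds):
        feedback = critique(draft)
        if feedback is None:          # critic found nothing to fix
            return draft
        prompt = (f"{task}\n\nPrevious attempt:\n{draft}\n\n"
                  f"Feedback:\n{feedback}\n\nPlease revise.")
        draft = generate(prompt)
    return draft

def unit_test_critic(code: str) -> Optional[str]:
    """Example critic for a coding task: run the candidate against known cases."""
    namespace: dict = {}
    try:
        exec(code, namespace)         # fine for a toy; never exec untrusted model output
        add = namespace["add"]
        for a, b in [(1, 2), (-3, 3), (10, 5)]:
            if add(a, b) != a + b:
                return f"add({a}, {b}) returned {add(a, b)}, expected {a + b}"
    except Exception as exc:
        return f"code raised {exc!r}"
    return None

def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: first answer is buggy, the revision uses the feedback."""
    if "Feedback:" in prompt:
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"

if __name__ == "__main__":
    print(reflect("Write a Python function add(a, b).", fake_llm, unit_test_critic))
```

Swapping `fake_llm` for a real model call and `unit_test_critic` for a second model prompted to critique the draft gives the LLM-reviews-LLM variant of reflection.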

Karpathy Envisions Jarvis - An LLM Operating System

Andrej Karpathy envisions turning large language models (LLMs) into the core of a new kind of operating system, capable of performing a wide range of computer operations. The approach integrates a state machine into the decoding process, so the LLM can execute code and read the results in real time. Seeing its own errors, the LLM can refine its code autonomously, reducing the need for manual corrections.

This vision expands the potential applications of LLMs, empowering them to manage tasks handled by an operating system, such as file navigation, internet searches, and database queries, paving the way for AI to become an integral part of everyday computing environments.
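To make the "LLM as the kernel" idea concrete, here is a toy dispatch loop. It is an illustration under assumptions, not Karpathy's design: the model is assumed to reply with JSON that is either a tool call or a final answer, and the loop plays the state machine that pauses generation, runs the tool, and feeds the result back into the context. `scripted_model`, the tool names, and the JSON format are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical "OS services"; a real system would wrap a file system, browser, database, etc.
FILES = {"notes.txt": "Meeting moved to 3pm."}

def read_file(path: str) -> str:
    return FILES.get(path, f"error: {path} not found")

def list_files() -> str:
    return ", ".join(sorted(FILES))

TOOLS: dict[str, Callable[..., str]] = {"read_file": read_file, "list_files": list_files}

def run_agent(model: Callable[[list], str], user_request: str, max_steps: int = 5) -> str:
    """Kernel loop: ask the model, run any requested tool, append the result, repeat."""
    context = [{"role": "user", "content": user_request}]
    for _ in range(max_steps):
        reply = model(context)
        message = json.loads(reply)
        if "answer" in message:                 # model is done
            return message["answer"]
        name = message.get("tool")
        if name not in TOOLS:
            result = f"error: unknown tool {name!r}"
        else:
            result = TOOLS[name](**message.get("args", {}))
        context.append({"role": "assistant", "content": reply})
        context.append({"role": "tool", "content": result})
    return "error: step limit reached"

def scripted_model(context: list) -> str:
    """Stand-in for an LLM: list files, read the notes, then answer."""
    tool_msgs = [m for m in context if m["role"] == "tool"]
    if not tool_msgs:
        return json.dumps({"tool": "list_files"})
    if len(tool_msgs) == 1:
        return json.dumps({"tool": "read_file", "args": {"path": "notes.txt"}})
    return json.dumps({"answer": f"Your notes say: {tool_msgs[-1]['content']}"})

if __name__ == "__main__":
    print(run_agent(scripted_model, "When is the meeting?"))
```

The step limit and the unknown-tool branch are the kind of guardrails any real "LLM OS" would need, since the model, not the programmer, decides which operation runs next.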

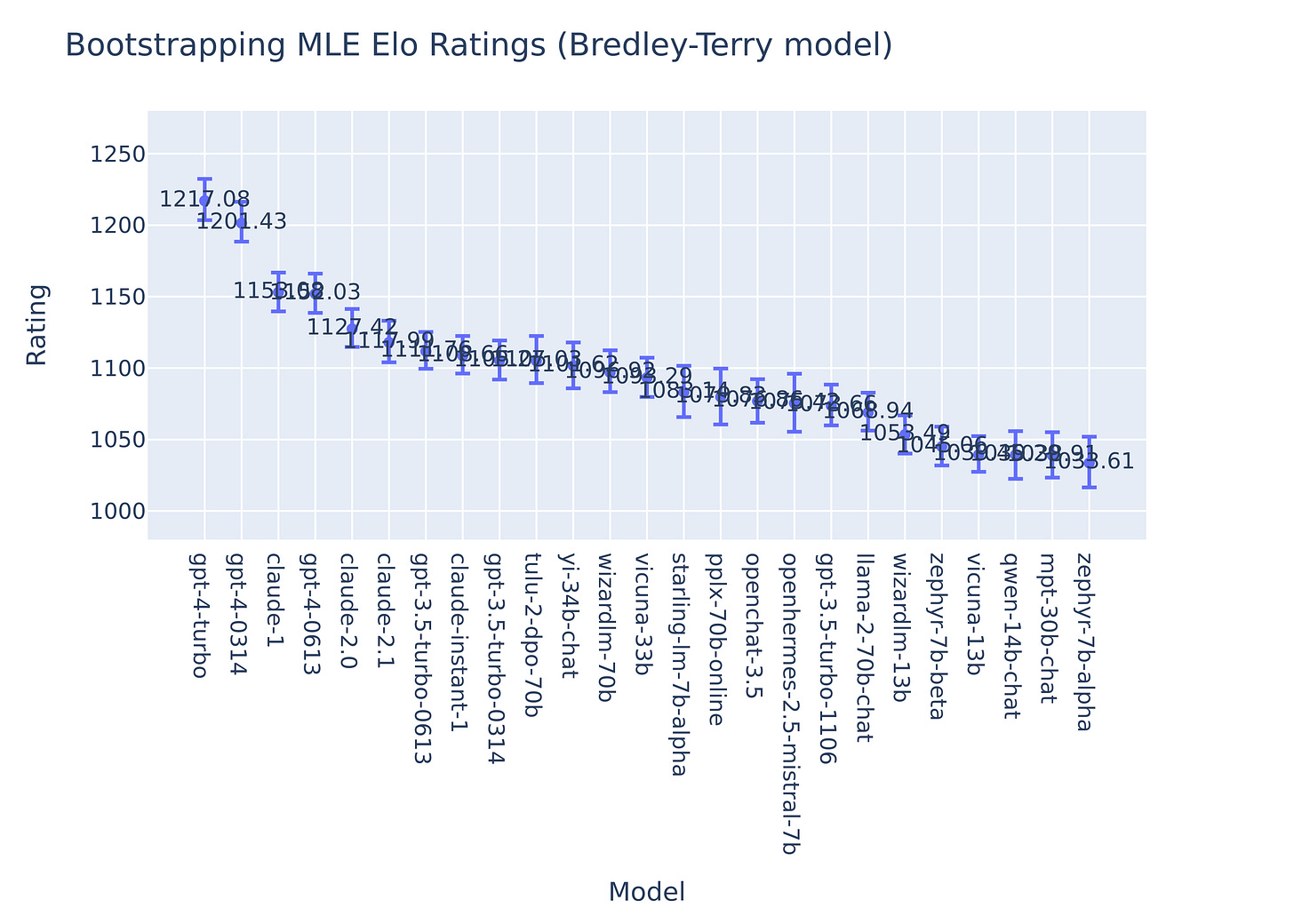

More Models, But OpenAI and GPT-4 Remain King

Meta and Yann LeCun Keep Cooking: Open-Sourced Llama 3!

Llama 3 70B is the best open-source model available, with a 405B-parameter model still to come.

Stanford’s AI Index Report 2024, in case you have nothing to do on your Wednesday night.